Check the filesets:

labserver101:/usr/es/sbin/cluster > lslpp -l | grep rsct

labserver101:/usr/es/sbin/cluster > lslpp -l | grep cluster

/etc/hosts on labserver101:

16.138.184.177 labserver101

16.138.184.171 labserver101_svc

10.1.1.1 labserver101_boot

11.1.1.1 labserver101_stdby

16.138.184.181 labserver201

10.1.1.2 labserver201_boot

11.1.1.2 labserver201_stdby

/etc/hosts on labserver201:

16.138.184.177 labserver101

16.138.184.171 labserver101_svc

10.1.1.1 labserver101_boot

11.1.1.1 labserver101_stdby

16.138.184.181 labserver201

10.1.1.2 labserver201_boot

11.1.1.2 labserver201_stdby
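Both nodes are expected to carry the same entries for the cluster labels. As a minimal sketch, the comparison could be scripted as below. The function names `hosts_entries` and `same_hosts` are illustrative, and the sketch assumes the other node's file has already been copied to a local path such as /tmp/hosts.labserver201 (a hypothetical location). Only the IP address and the first name on each line are compared.

```shell
# Hypothetical helper: print "IP name" pairs from a hosts file, sorted.
# Comment lines and blank lines are skipped; aliases after the first name are ignored.
hosts_entries() {
    # $1: path to a hosts file
    awk 'NF >= 2 && $1 !~ /^#/ { print $1, $2 }' "$1" | sort
}

# Hypothetical helper: succeed only when both files define the same pairs.
same_hosts() {
    # $1, $2: paths to the two hosts files to compare
    [ "$(hosts_entries "$1")" = "$(hosts_entries "$2")" ]
}
```

For example: `same_hosts /etc/hosts /tmp/hosts.labserver201 && echo "hosts entries match"`.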

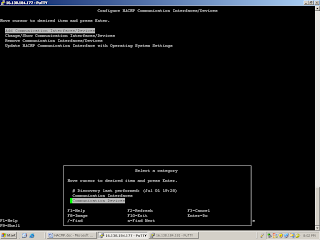

From labserver101: smitty hacmp -> Extended Configuration -> define the cluster name

RG Name : saprg

Participating nodes : labserver101 labserver201

Enhanced concurrent VG creation from labserver101

labserver101:/usr/es/sbin/cluster > lspv | grep hdisk1

hdisk1 0005e8e07d6b9991 None

labserver101:/usr/es/sbin/cluster > lspv | grep 0005e8e07d6b9991

hdisk1 0005e8e07d6b9991 None

labserver201:/usr/es/sbin/cluster > lspv

hdisk0 0005e8d2c756518f rootvg active

hdisk1 0005e8e07d6b9991 None

labserver201:/usr/es/sbin/cluster > lspv | grep 0005e8e07d6b9991

hdisk1 0005e8e07d6b9991 None
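The lspv output above shows the same PVID (0005e8e07d6b9991) for hdisk1 on both nodes, which is how the shared disk is identified. A small sketch for pulling the PVID out of lspv-style output follows. The function name `pvid_of` is illustrative and not part of AIX; it reads from standard input, so it can be fed from `lspv` on each node.

```shell
# Hypothetical helper: print the PVID (second column) of the named disk
# from lspv-style output supplied on standard input.
pvid_of() {
    # $1: disk name, for example hdisk1
    awk -v disk="$1" '$1 == disk { print $2 }'
}
```

Running `lspv | pvid_of hdisk1` on labserver101 and on labserver201 and comparing the two strings gives the same check as the grep commands above.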

labserver101:/usr/es/sbin/cluster > lsvg

rootvg

testvg

labserver101:/usr/es/sbin/cluster > lsvg -o

rootvg
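Here `lsvg` lists every volume group defined on the node, while `lsvg -o` lists only those currently varied on, so testvg is defined but not active on labserver101 at this point. A minimal sketch of that comparison is shown below. The function name `inactive_vgs` and the temporary file paths in the usage line are assumptions made for illustration.

```shell
# Hypothetical helper: print volume groups that are defined but not varied on.
inactive_vgs() {
    # $1: file holding `lsvg` output (all defined volume groups)
    # $2: file holding `lsvg -o` output (varied-on volume groups)
    # -F fixed strings, -x whole-line match, -v invert, -f read patterns from file
    grep -vxF -f "$2" "$1"
}
```

For example: `lsvg > /tmp/vgs.all; lsvg -o > /tmp/vgs.on; inactive_vgs /tmp/vgs.all /tmp/vgs.on`.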

labserver101:/usr/es/sbin/cluster > ls -l /dev/hdisk1

brw------- 1 root system 17, 1 Jul 01 18:55 /dev/hdisk1

labserver201:/dev > ls -l /dev/hdisk1

brw------- 1 root system 17, 1 Jun 29 14:45 /dev/hdisk1

Create the file system /oracle in testvg

Lv01 -> /oracle

labserver101:/usr/es/sbin/cluster/utilities > ./cltopinfo

Cluster Name: hpcluster

Cluster Connection Authentication Mode: Standard

Cluster Message Authentication Mode: None

Cluster Message Encryption: None

Use Persistent Labels for Communication: No

There are 2 node(s) and 1 network(s) defined

NODE labserver101:

Network net_ether_01

labserver101_svc 16.138.184.171

labserver101_boot 10.1.1.1

labserver101_stdby 11.1.1.1

NODE labserver201:

Network net_ether_01

labserver101_svc 16.138.184.171

labserver201_boot 10.1.1.2

labserver201_stdby 11.1.1.2

Resource Group saprg

Startup Policy Online On Home Node Only

Fallover Policy Fallover To Next Priority Node In The List

Fallback Policy Fallback To Higher Priority Node In The List

Participating Nodes labserver101 labserver201

Service IP Label labserver101_svc

labserver101:/usr/es/sbin/cluster > lssrc -ls clstrmgrES

Current state: ST_STABLE

sccsid = "@(#)36 1.135.1.82 src/43haes/usr/sbin/cluster/hacmprd/main.C, hacmp.pe, 52haes_r541, 0747B_hacmp541 11/13/07 10:40:55"

i_local_nodeid 0, i_local_siteid -1, my_handle 1

ml_idx[1]=0

There are 0 events on the Ibcast queue

There are 0 events on the RM Ibcast queue

CLversion: 9

local node vrmf is 5410

cluster fix level is "0"

The following timer(s) are currently active:

Current DNP values

DNP Values for NodeId - 0 NodeName - labserver101

PgSpFree = 0 PvPctBusy = 0 PctTotalTimeIdle = 0.000000

DNP Values for NodeId - 0 NodeName - labserver201

PgSpFree = 0 PvPctBusy = 0 PctTotalTimeIdle = 0.000000
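The first line of the `lssrc -ls clstrmgrES` output reports the cluster manager state, ST_STABLE in the run above. As a sketch, the state can be extracted for use in a script like this. The function name `cluster_state` is illustrative; it only parses the "Current state:" line in the format shown above.

```shell
# Hypothetical helper: print the value of the "Current state:" line
# from `lssrc -ls clstrmgrES` output supplied on standard input.
cluster_state() {
    awk -F': *' '/^Current state:/ { print $2; exit }'
}
```

For example: `[ "$(lssrc -ls clstrmgrES | cluster_state)" = "ST_STABLE" ] && echo "cluster manager is stable"`.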